Smart Automation of Trial and Error to Beat Cancer Sooner

Jonas Bermeitinger

Affiliation: LABMaiTE GmbH, Freiburg im Breisgau, Germany

DOI: 10.17160/josha.11.6.1016

Languages: English

Why We Need to Relearn How to Talk to Machines - A Snapshot of Generative AI in January 2024

Maria Kalweit, Gabriel Kalweit

Affiliation: Collaborative Research Institute Intelligent Oncology (CRIION), Freiburg, Germany

DOI: 10.17160/josha.11.2.977

Languages: English

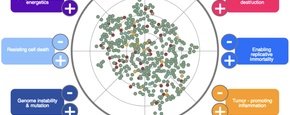

AI as an Always-available Oncologist: A Vision for AI-optimized Cancer Therapy Based on Real-time Adaptive Dosing at the Patient Level

Gabriel Kalweit, Luis García Valiña, Ignacio Mastroleo, Anusha Klett et al.

Affiliation: University of Buenos Aires, Argentina; FLACSO, Argentina; Collaborative Research Institute Intelligent Oncology (CRIION), Freiburg im Breisgau, Germany

DOI: 10.17160/josha.11.1.975

Languages: English

Critical Review of “Ethics & Governance of Artificial Intelligence for Health” by the World Health Organization (WHO)

Rebecca Berger

Affiliation: Department of Internal Medicine, University Hospital Freiburg, Freiburg im Breisgau, Germany

DOI: 10.17160/josha.11.1.956

Languages: English

Insights into Tomorrow: Psychology's Current Transformations

Cinthya Souza Simas

Affiliation: Journal of Science, Humanities and Arts (JOSHA), Freiburg im Breisgau, Germany

DOI: 10.17160/josha.11.1.955

Languages: English

JOSHA’s Critical Review of “High Hopes for ‘Deep Medicine’? AI, Economics, and the Future of Care” by Robert Sparrow and Joshua Hatherley

Neher Aseem Parimoo, Ignacio Mastroleo, Roland Mertelsmann

Affiliation: Journal of Science, Humanities, and Arts, Freiburg im Breisgau, Germany

DOI: 10.17160/josha.10.6.910

Languages: English

Ein Beitrag zur Künstliche Intelligenz – A Contribution to Artificial Intelligence

Hans Burkhardt

Affiliation: Institute for Computer Science, University of Freiburg, Germany

DOI: 10.17160/josha.10.2.892

Languages: German

JOSHA’s Critical Review of “How to Regulate Evolving AI Health Algorithms” by David W. Bates

Neher Aseem Parimoo, Ignacio Mastroleo, Roland Mertelsmann

Affiliation: Journal of Science, Humanities, and Arts, Freiburg im Breisgau, Germany

DOI: 10.17160/josha.10.2.880

Languages: English

Künstliche Intelligenz in der Krebstherapie - Artificial Intelligence in Cancer Therapy

Gabriel Kalweit, Maria Kalweit, Ignacio Mastroleo, Joschka Bödecker et al.

Affiliation: Collaborative Research Institute Intelligent Oncology (CRIION), Freiburg, Germany; Department of Computer Science, University of Freiburg, Germany

DOI: 10.17160/josha.10.2.875

Languages: German

Design Research for Healthcare

Anmol Anubhai

Affiliation: Seattle, Washington, United States of America

DOI: 10.17160/josha.10.2.854

Languages: English